About

Hi! My name is John Green and I am a computer graphics engineer based in the UK, currently working in the video game sector. My main passion is computer graphics, but I love exploring new areas of programming and electronics to broaden my knowledge of the wider field. I have worked on several professional projects, three of which have been published so far. I have experience with several graphics APIs, including Vulkan, DirectX 9-12, OpenGL and some platform-specific APIs.

Outside of work, I continue my development by tinkering with lots of small projects that keep me on my toes and help me learn, one of which is a 2D game engine in Vulkan. I also love learning about older games consoles, and have made my own Game Boy emulator (to run games on PC) and hardware (to trick the console into reading my PCB as a cartridge).

Professional Work

Alan Wake Remastered

During my time at d3t I worked on several tasks on this project. I was in charge of implementing and updating post-process passes such as motion blur and velocity blur, as well as parallax mapping. I also helped debug and optimize graphics-related areas of the game using tools such as PIX and NVIDIA Nsight.

Alan Wake Remastered - Switch

After the launch of the main Alan Wake Remastered release, a small team of three programmers, including myself, ported the game from PS5/PC to Nintendo Switch. On this project I handled all of the low-level NVN graphics work and most of the graphics optimizations. Getting the game running on the Switch required many new features, such as memory tracking and a multithreaded garbage collector/defragmenter.

Marvel's Guardians of the Galaxy

On Marvel's Guardians of the Galaxy I helped port the title to a new platform and was in charge of porting the rendering API over to Vulkan. During this project I learned a great deal about the intricacies of moving a AAA title to a new platform, as well as the complexities of owning such a large deliverable.

Project 4 & 5 - Unreleased

Whilst at d3t, I also worked on a title that is still under NDA, where I led the rendering for the duration of the project.

Project 6 - Unreleased

Whilst working at EA - Codemasters, I have been part of a rendering team working to ship a racing title in Unreal Engine. During this time I have worked deep in the engine code, adding new features and fixing issues so that the title can release and perform well.

Personal Projects

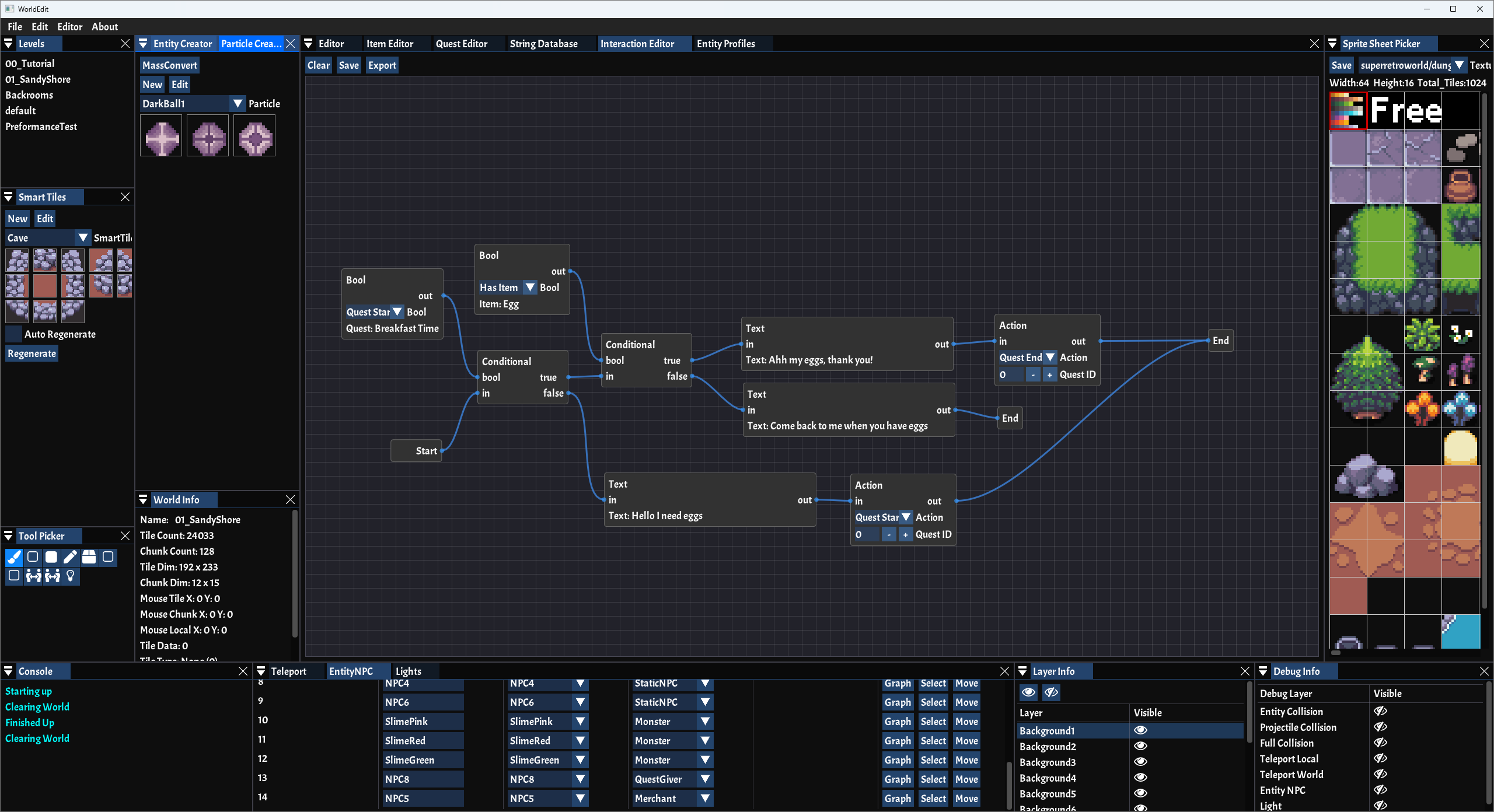

2D Game Engine

In my own time I started making a 2D game engine in Vulkan to develop an adventure game. The engine is designed to render as efficiently as possible using techniques such as view frustum culling in compute shaders and pre-made render sets, so that the render command lists never need to be updated. The project includes an editor and a game, along with several libraries designed for the engine that handle maths, physics, world streaming, etc.
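As an illustration of the culling idea, here is a minimal CPU-side sketch of the per-sprite test a 2D culling compute shader might perform. It assumes sprites are bounded by axis-aligned boxes and that the camera's view is a rectangle; the names `Aabb2D` and `cullSprites` are hypothetical and not taken from the engine itself.

```cpp
#include <cstdint>
#include <vector>

// Axis-aligned bounding box in world space (hypothetical type).
struct Aabb2D {
    float minX, minY, maxX, maxY;
};

// True if the two boxes overlap (touching edges count as visible).
bool overlaps(const Aabb2D& a, const Aabb2D& b) {
    return a.minX <= b.maxX && a.maxX >= b.minX &&
           a.minY <= b.maxY && a.maxY >= b.minY;
}

// CPU mirror of one culling dispatch: each loop iteration corresponds to one
// compute-shader invocation. A GPU version would append to the visible list
// with an atomic counter instead of push_back.
std::vector<uint32_t> cullSprites(const std::vector<Aabb2D>& sprites,
                                  const Aabb2D& cameraView) {
    std::vector<uint32_t> visible;
    for (uint32_t i = 0; i < static_cast<uint32_t>(sprites.size()); ++i) {
        if (overlaps(sprites[i], cameraView)) {
            visible.push_back(i);
        }
    }
    return visible;
}
```

Only the indices of visible sprites are kept, so later passes can work from a compact list rather than re-testing every sprite.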

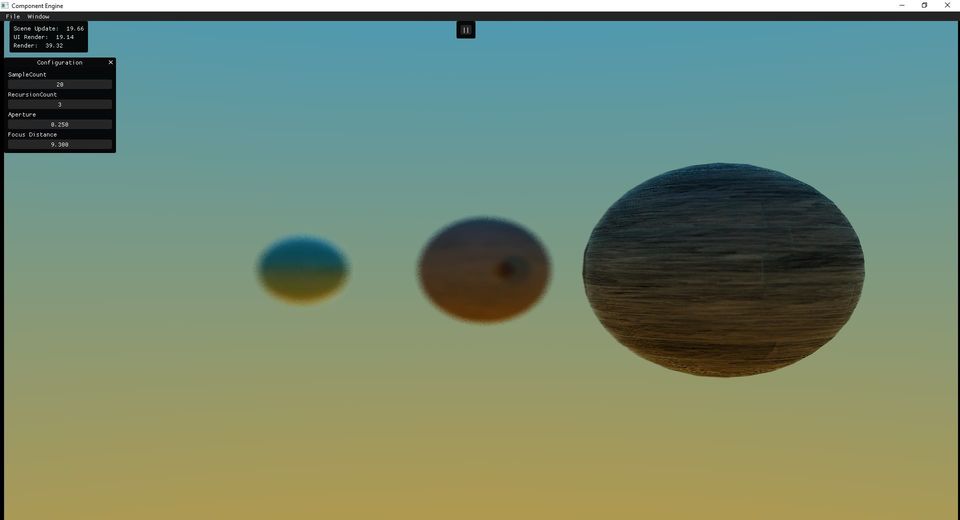

RTX Raytracer

During my time at university I took my third-year project (Entity Component Game Engine) and added Vulkan's RTX ray tracing to it. I developed several tools, techniques and effects during this project. I initially considered implementing depth of field as a post-process effect, but with RTX I was able to generate it by passing the camera rays through a mathematical model of a camera lens. I also implemented ray-traced soft and hard shadows, reflection/refraction and transparency, and every material in the renderer was PBR with parallax mapping enabled.
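Passing camera rays through a lens model is usually done with a thin-lens camera. The sketch below is a minimal version under stated assumptions: camera space with the camera looking down +z, a circular aperture of radius `lensRadius`, and a focal plane at `z = focusDistance`. The function and type names are hypothetical, and the renderer's actual lens model may differ.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

Vec3 sub(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 scale(Vec3 v, float s) { return {v.x * s, v.y * s, v.z * s}; }
Vec3 normalize(Vec3 v) {
    float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    return scale(v, 1.0f / len);
}

struct Ray { Vec3 origin; Vec3 direction; };

// Thin-lens camera ray in camera space (camera looks down +z).
// pinholeDir: direction a pinhole camera would use for this pixel (z > 0).
// u1, u2: uniform random numbers in [0, 1) used to pick a point on the lens.
Ray thinLensRay(Vec3 pinholeDir, float lensRadius, float focusDistance,
                float u1, float u2) {
    const float kPi = 3.14159265358979f;
    // Uniformly sample a point on the lens disk (polar mapping).
    float r = lensRadius * std::sqrt(u1);
    float theta = 2.0f * kPi * u2;
    Vec3 lensPoint{r * std::cos(theta), r * std::sin(theta), 0.0f};

    // Point where the pinhole ray crosses the plane of focus.
    float t = focusDistance / pinholeDir.z;
    Vec3 focusPoint = scale(pinholeDir, t);

    // Every lens sample for this pixel converges on focusPoint, so geometry
    // on the focal plane stays sharp while everything else blurs.
    return {lensPoint, normalize(sub(focusPoint, lensPoint))};
}
```

Averaging many samples per pixel, each with different `u1`/`u2`, produces the blur; setting `lensRadius` to 0 falls back to a pinhole camera with everything in focus.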

I also added a secondary rendering mode for offline video capture. It let me record camera paths and have the RTX renderer replay them to capture extremely high-quality footage at 4K 60 FPS, even though in real time only around 3-4 FPS was achieved at that quality.
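Offline capture like this generally works by decoupling video time from wall-clock time. Output frame `n` always represents time `n / 60`, however long it took to render. The sketch below is a minimal illustration that assumes a recorded path of position-only keyframes with linear interpolation. Orientation is omitted, and `CameraKey`, `samplePath` and `captureTime` are hypothetical names.

```cpp
#include <cstddef>
#include <vector>

struct CameraKey {
    double time;    // seconds along the recorded path
    float x, y, z;  // camera position (orientation omitted for brevity)
};

// Linearly interpolate the recorded path at time t.
// Assumes keys is non-empty and sorted by time.
CameraKey samplePath(const std::vector<CameraKey>& keys, double t) {
    if (t <= keys.front().time) return keys.front();
    if (t >= keys.back().time) return keys.back();
    std::size_t i = 1;
    while (keys[i].time < t) ++i;  // first key at or after t
    const CameraKey& a = keys[i - 1];
    const CameraKey& b = keys[i];
    float s = static_cast<float>((t - a.time) / (b.time - a.time));
    return {t, a.x + (b.x - a.x) * s, a.y + (b.y - a.y) * s,
            a.z + (b.z - a.z) * s};
}

// Offline capture: frame n always represents time n / fps, regardless of
// how long the renderer actually spent on that frame.
double captureTime(unsigned frameIndex, double fps) {
    return frameIndex / fps;
}
```

At 3-4 FPS of actual rendering, each second of 60 FPS footage takes roughly 15-20 seconds of wall-clock time (60 / 4 = 15, 60 / 3 = 20).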

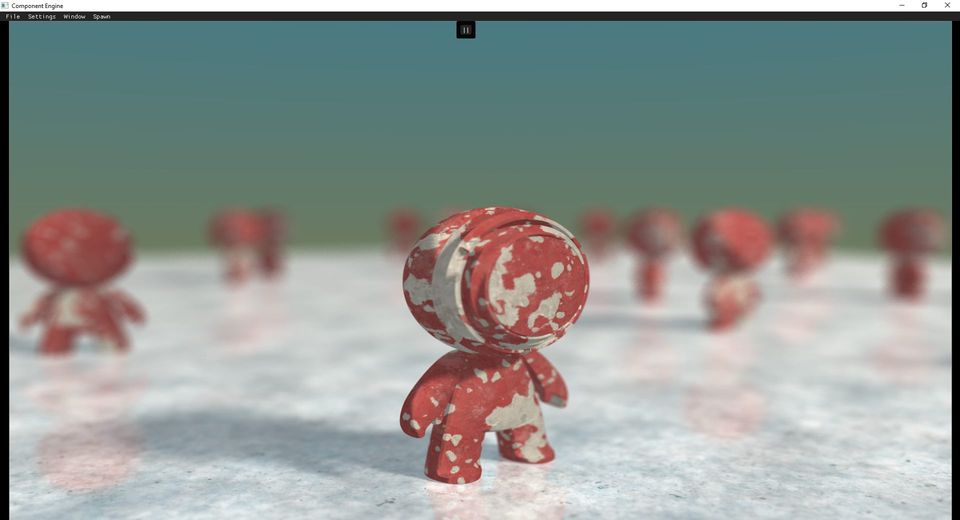

Entity Component Game Engine

For my third-year project I created an entity component game engine that used Vulkan as its primary rendering API. This project was a technical challenge to see whether I could implement a fast ECS with pre-generated entity lookup caches, and use Vulkan rendering techniques that tie into the ECS to enable fast, dynamic rendering and logic. One such technique was indirect drawing: I pre-generated a list of draw commands and toggled them on and off GPU-side based on visibility and on whether the component was enabled.
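One common way to toggle pre-generated indirect draws is to leave each command intact and only rewrite its `instanceCount`: 0 skips the draw and 1 issues it. The struct below mirrors the field layout of Vulkan's `VkDrawIndexedIndirectCommand`, which `vkCmdDrawIndexedIndirect` reads from a buffer. The update loop is a hypothetical CPU stand-in for work that, in an engine like this, would run on the GPU (for example in a compute shader writing the indirect buffer). It is a sketch, not the project's actual code.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Same field layout as Vulkan's VkDrawIndexedIndirectCommand.
struct DrawIndexedIndirectCommand {
    uint32_t indexCount;
    uint32_t instanceCount;
    uint32_t firstIndex;
    int32_t  vertexOffset;
    uint32_t firstInstance;
};

// One pre-generated command per renderable entity; the list is built once.
// Each frame only instanceCount changes: 0 skips the draw, 1 issues it.
// (CPU loop for clarity; hypothetically a compute shader on the GPU.)
void updateDrawCommands(std::vector<DrawIndexedIndirectCommand>& commands,
                        const std::vector<bool>& componentEnabled,
                        const std::vector<bool>& visible) {
    for (std::size_t i = 0; i < commands.size(); ++i) {
        commands[i].instanceCount =
            (componentEnabled[i] && visible[i]) ? 1u : 0u;
    }
}
```

Because the command buffer that issues the indirect draw never changes, only the contents of the indirect buffer need updating each frame.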

Game Boy Emulator

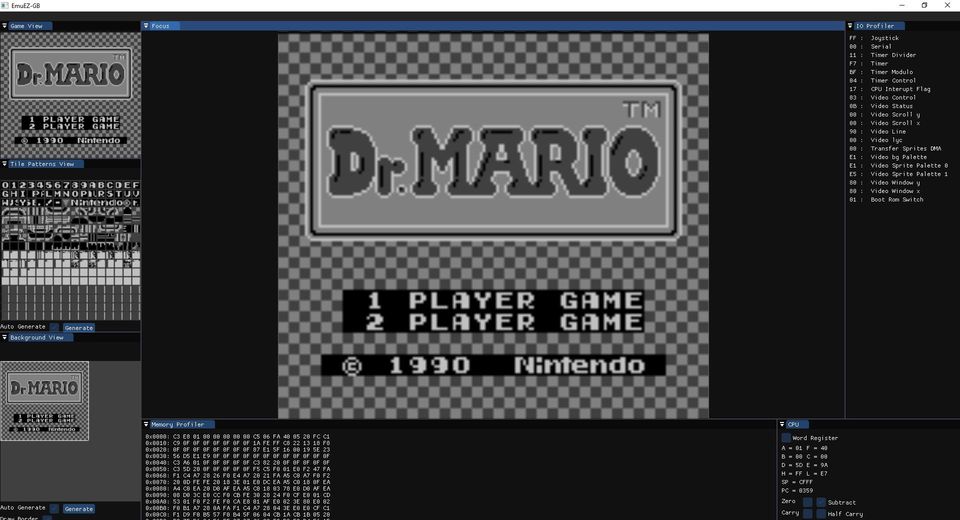

Software Emulation

Learning how computers work under the hood has always fascinated me, and building an emulator is something I had always wanted to do. In this project I made a Game Boy emulator in C/C++, using ImGui to help debug frame data and to see how games worked under the hood. The emulator is fully compatible with all games and all cartridge types (MBC1, MBC3, MBC5, etc.).
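Supporting cartridge types mostly comes down to emulating each memory bank controller's banking registers. The sketch below is a deliberately simplified MBC1 that models only the 5-bit ROM bank register. RAM banking, the upper bank bits and the banking-mode register are left out, and the `Mbc1` class is hypothetical rather than the emulator's own.

```cpp
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Simplified MBC1: only the 5-bit ROM bank register is modelled.
class Mbc1 {
public:
    explicit Mbc1(std::vector<uint8_t> rom) : rom_(std::move(rom)) {}

    uint8_t read(uint16_t address) const {
        if (address < 0x4000) {
            return rom_[address % rom_.size()];  // fixed bank 0
        }
        if (address < 0x8000) {
            // Switchable bank mapped into 0x4000-0x7FFF.
            std::size_t offset =
                std::size_t(romBank_) * 0x4000 + (address - 0x4000);
            return rom_[offset % rom_.size()];
        }
        return 0xFF;  // cartridge RAM and other regions not handled here
    }

    void write(uint16_t address, uint8_t value) {
        // Writes to 0x2000-0x3FFF select the ROM bank seen at 0x4000-0x7FFF.
        if (address >= 0x2000 && address < 0x4000) {
            romBank_ = value & 0x1F;
            if (romBank_ == 0) romBank_ = 1;  // MBC1 maps bank 0 to bank 1
        }
    }

private:
    std::vector<uint8_t> rom_;
    uint8_t romBank_ = 1;
};
```

The game writes to a ROM address range it cannot actually modify, and the controller interprets that write as a bank switch. That is why the emulator must intercept writes rather than ignore them.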

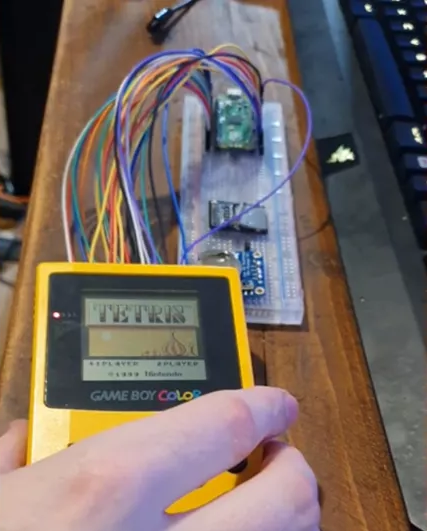

Hardware Emulation

As well as learning how the CPU worked, I wanted to see if I could trick a Game Boy into accepting my own PCBs as an actual Game Boy cartridge. I achieved this, published the code and information on my GitHub, and the project was referenced in several articles. In this project I used a microcontroller to read the address request pins and serve the game data when requested. I want to continue this work in the future with the Raspberry Pi Pico W, which has built-in Wi-Fi and Bluetooth, as that should be a fun feature to experiment with.
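The core decision the microcontroller makes on each bus cycle can be sketched as a pure function. This sketch assumes a ROM-only cartridge of up to 32 KB with no memory bank controller, and assumes the firmware has already sampled the address lines and the console's read strobe. Reading the GPIO pins, meeting the console's bus timing and driving the data lines are board-specific and omitted. `BusState` and `respond` are hypothetical names.

```cpp
#include <cstdint>
#include <optional>
#include <vector>

// Snapshot of the cartridge-slot signals the microcontroller has sampled.
struct BusState {
    uint16_t address;  // A0-A15
    bool readActive;   // console is pulling /RD low
};

// Returns the byte to drive onto D0-D7, or nothing if the data bus should be
// left floating (high impedance) so the console or other devices can use it.
std::optional<uint8_t> respond(const BusState& bus,
                               const std::vector<uint8_t>& rom) {
    bool romRegion = (bus.address & 0x8000) == 0;  // A15 low: 0x0000-0x7FFF
    if (bus.readActive && romRegion && bus.address < rom.size()) {
        return rom[bus.address];
    }
    return std::nullopt;
}
```

Leaving the bus undriven outside the ROM region matters on real hardware: if the cartridge drove the data lines during, say, a work RAM access, it would conflict with the console's own memory.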